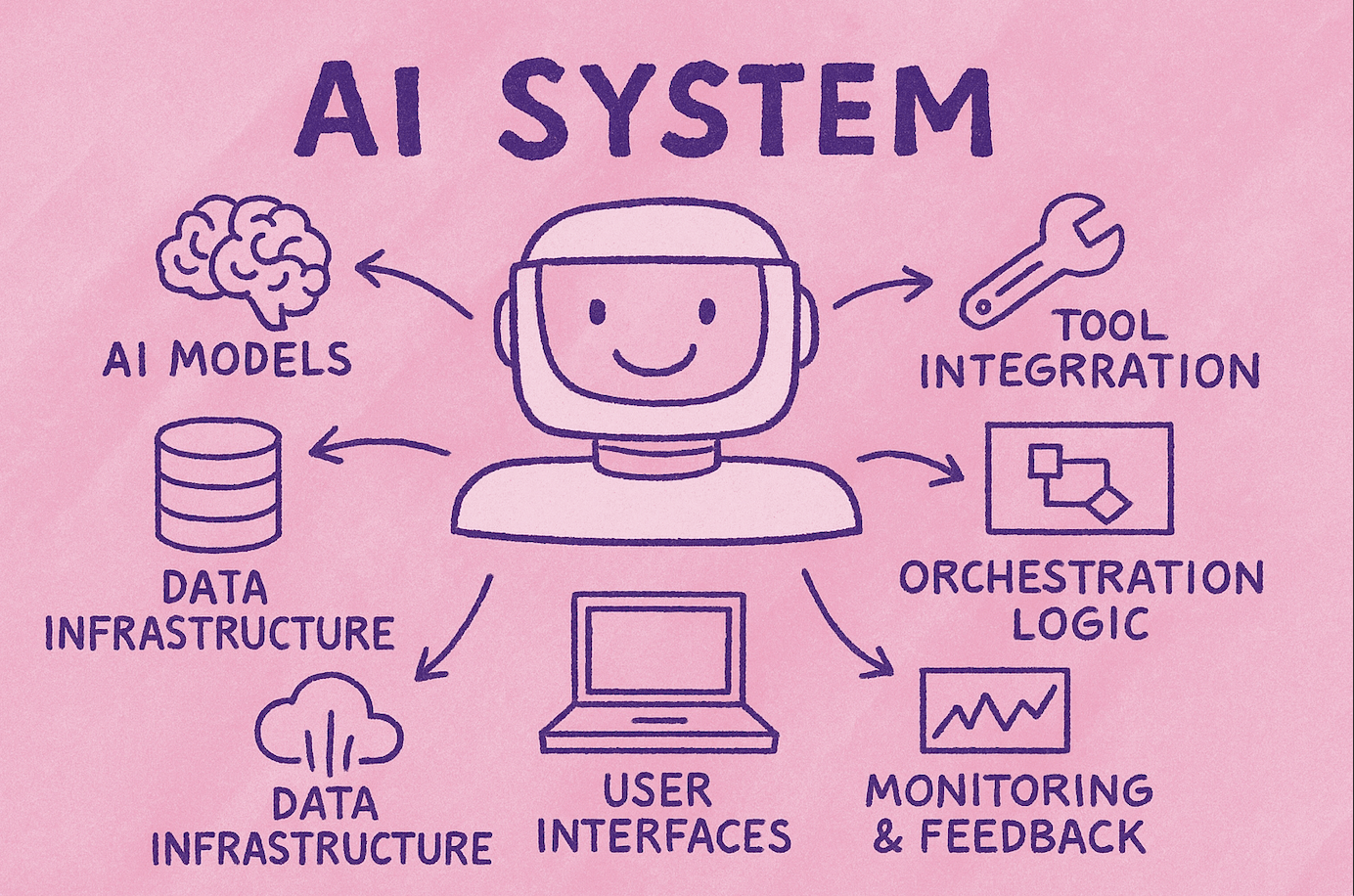

An AI system is the comprehensive integration of components that collectively deliver artificial intelligence-powered functionality. It combines the generative capabilities of LLM, the reasoning and decision-making processes of agents, the action-enabling capabilities of tools, and the reliability mechanisms of the orchestration layer. Additional infrastructure components, such as integration layers, plugin frameworks, or standardized protocols like the Model Context Protocol (MCP), enhance these systems’ portability, scalability, and interoperability.

Key characteristics

- Multi-layered – Built from distinct but cooperating parts (model, agent, tools, orchestration)

- Context-aware – Ingests prompts, environmental state, or external data when producing outputs

- Observable – Provides monitoring, debugging, and audit capabilities across all components

- Tool-augmented – Extends beyond text generation by integrating external capabilities

- Reliable – Uses orchestration to handle retries, workflows, and guardrails

- Interoperable – Can leverage protocols like MCP for standardized access to tools and resources

- Scalable – Supports many tools, agents, and services without exponential integration effort

An AI system represents the end-to-end assembly that enables reasoning, action, and reliability simultaneously.

AI system components

- LLM – The generative core component

- Agent – The reasoning and decision-making layer

- Tool – External capabilities that extend model functionality

- Integration layers – Custom connectors, plugin frameworks, or standardized protocols such as MCP

- Orchestration layer – Ensures workflows, retries, logging, and guardrails

Understanding relationships

AI system vs. subcomponents

- LLM ≠ AI system – A large language model generates text but lacks orchestration, memory, or tool access capabilities.

- AI agent ≠ AI system – An agent can reason, but without supporting infrastructure, it remains fragile and limited.

- Tool ≠ AI system – Tools provide specific capabilities but require agents to invoke and coordinate them.

- Orchestration ≠ AI system – Orchestration ensures reliability but does not reason or generate content.

Key distinction: An AI system represents the integration of model + agent + tools + orchestration (optionally enhanced by protocols like MCP) into a unified, goal-serving entity.

Examples

A customer support AI system demonstrates this integration by:

- Using an LLM to interpret user complaints and requests.

- Running an agent to determine whether to respond directly, search documentation, or escalate the issue.

- Invoking tools (such as knowledge base search or ticketing APIs) through custom connectors or MCP protocols.

- Relying on the orchestration layer to manage retries, apply compliance filters, and log all interactions.

From the user’s perspective, this appears as a seamless “AI system,” while the underlying architecture represents a carefully orchestrated collaboration of multiple specialized components.

Non-examples

- Standalone LLMs – These generate text but lack tools, orchestration, or integration capabilities.

- Single agents – These can reason and act, but without orchestration or supporting infrastructure they do not constitute a complete system.

- Individual tools – A database query API or mathematical engine alone does not qualify as an AI system.

- Isolated orchestration – Workflow automation or retry mechanisms without an agent and model do not meet the criteria.

- Traditional machine learning models – Classifiers, translators, or recommenders that produce outputs but lack autonomy, tool use, or system-level integration.

An AI system requires the integration of multiple components (LLM, agents, tools, orchestration, and supporting infrastructure). No single component alone constitutes a complete system.